Adapted from the PubCon Austin 2023 presentation, A Deep Dive Into Google’s Latest Algorithm Updates by Lily Ray, Sr. SEO Director and Head of Organic Research at Amsive Digital.

Jump to: Classifying Google Systems vs. Google Updates | Helpful Content System + The (Un)helpful Content Classifier | Who was impacted by the helpful content update? | What does unhelpful content look like? | A deeper dive into a network of sites affected by the helpful content system | What doesn’t work as far as helpful content is concerned? | December Update to the Google Search Quality Rater Guidelines | What does expertise vs. experience look like? | How Does AI Fit In? | What does this all mean for AI content?

The latest advances in AI have drastically changed the digital landscape and captured the public’s attention. The SEO industry needs to pay close attention to these shifts, which will shift the course of how we approach our jobs and our careers. Don’t worry, we’ll get to AI, but first, let’s look at the other recent changes to Google’s ranking systems that deserve assessment and attention.

Classifying Google Systems vs. Google Updates

First and foremost, we learned in 2022 that Google refers to these changes as systems rather than updates. Many of the things we previously called Google ranking updates are actually systems, such as BERT, page experience, passage ranking, RankBrain, the exact match domain system, and more. On top of these, there are updates to the systems: the helpful content system or the passage ranking system, for example, can each receive updates of their own.

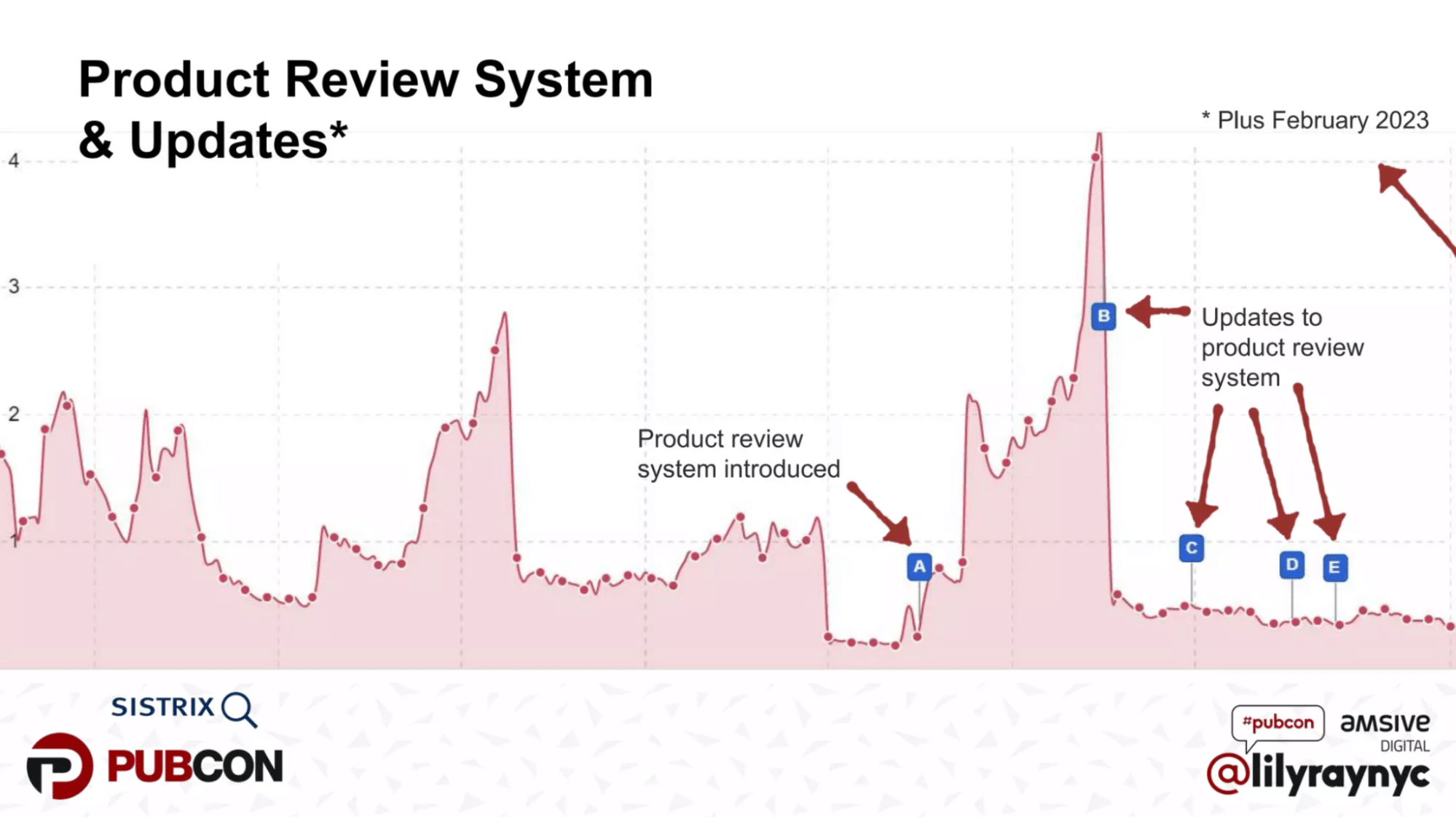

Take the product review system. So far, there have been six updates to this system, including one at the end of February 2023. Each time one of these updates goes live, there is a high potential for ranking volatility.

Many of these systems operate concurrently, so it’s important to understand which one might be affecting your site. Google now maintains an extremely helpful list of its ranking updates, including when each one started and finished rolling out.

Helpful Content System + The (Un)helpful Content Classifier

The helpful content system deserves a particularly close look, especially given the new focus on AI. When this system launched in August 2022, it felt like it was going to be a big deal for search. The main takeaway is that Google wants to elevate content written by people, for people. In particular, the system seemed designed to reduce the visibility of content written primarily to boost SEO rankings rather than to provide valuable information, and to favor published content that feels authentic and original and that was written by actual people.

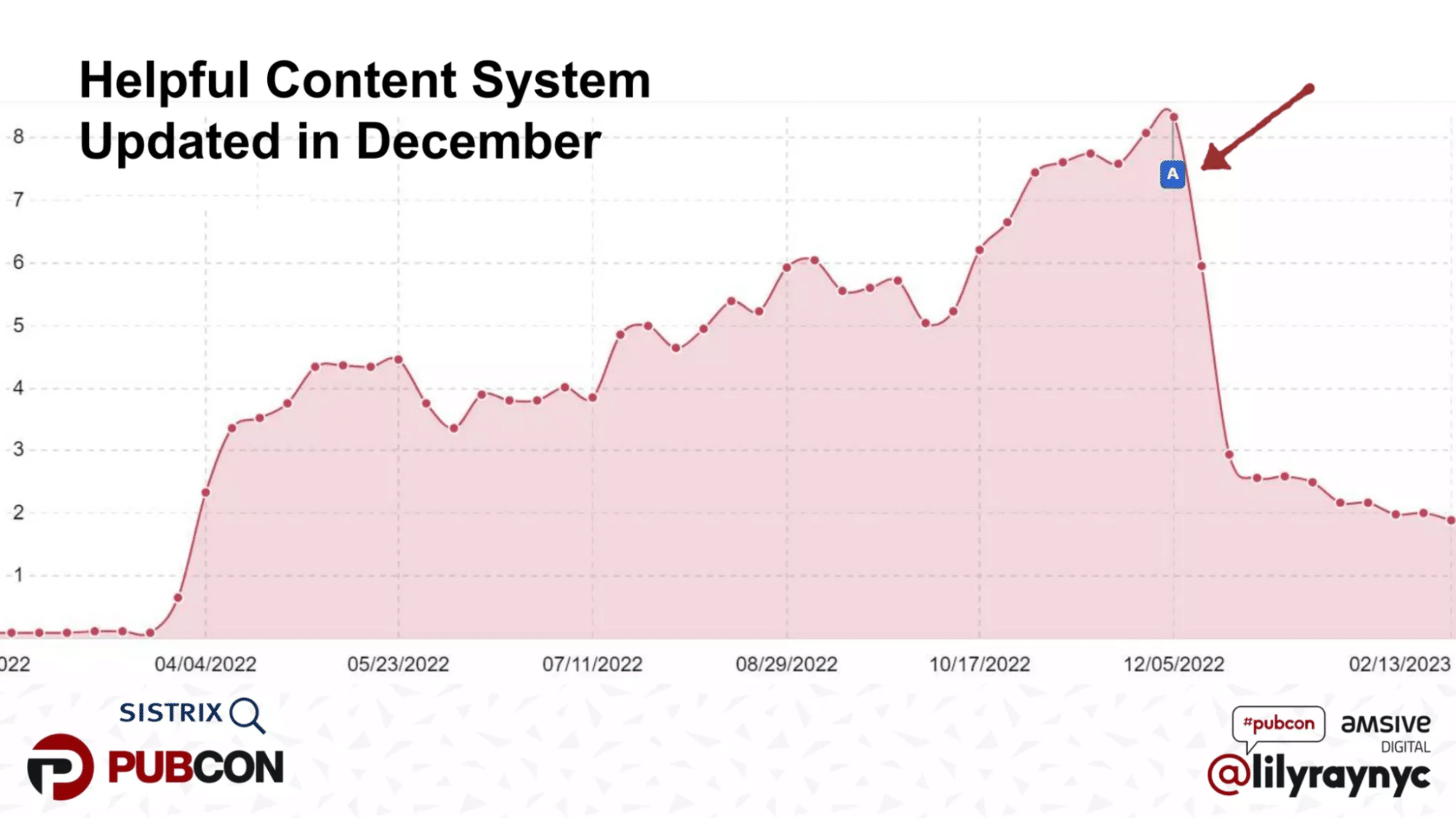

When this system first rolled out, its impact wasn’t obvious; during the initial rollout window, relatively few sites seemed affected. In December, however, we started to see a lot of sites feeling the impact.

An important thing to know is that the helpful content system introduced a new sitewide signal, which we’ll call the unhelpful content classifier. According to Google, it looks for content with little value, low added value, or content that is otherwise not particularly helpful. Essentially, Google is looking for SEO spam.

The content primarily affected by this system is not the worst type of spam but the gray area of what can be considered SEO spam. Because the signal is sitewide, if Google considers the majority of a site’s content unhelpful, the entire site can see a negative ranking impact, including content that would otherwise be classified as helpful, no matter how insightful or informative it is.

The unhelpful content classifier is automated and uses machine learning. Because it runs continuously, your site can be demoted at any time. It’s not a manual action, so you won’t receive a message from Google or in Google Search Console alerting you to the impact. However, the demotion can also be lifted at any time once the unhelpful content is removed.

Who was impacted by the helpful content update?

I’ve been looking into who was impacted since the system was initially rolled out, and some of the most impacted sites include:

- Lyric sites

- Adult sites

- Grammar sites

- Travel blogs

- Low-quality affiliate sites

- Product manuals (duplicate content)

- Aggregator sites

- Guitar chords

In many cases, the creators of these sites may think that they’re providing value because they’re putting out decent quality content and answers to user questions. However, there are so many sites already doing the same thing. How are these sites differentiating their content? How are they going above and beyond to prove to the user that they’re an authentic brand that’s adding a lot of value and original content? Google is looking for content that goes the extra mile to provide unique value for users.

What does unhelpful content look like?

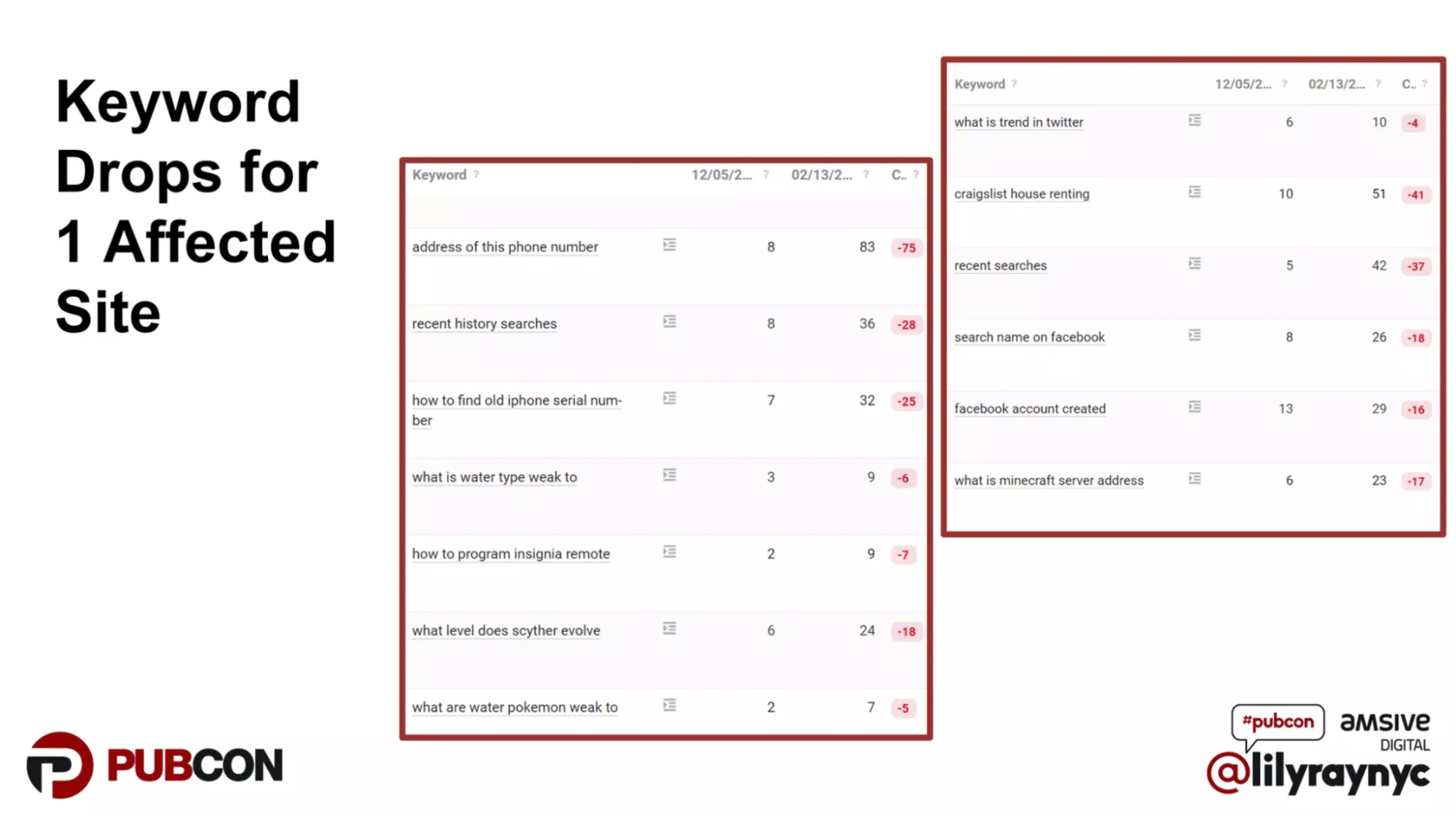

Here’s an example of the keywords from one site that was impacted by this system:

These are the types of keywords the site initially ranked for before falling to much lower positions on Google. As you can see, it ranked for a wide range of topics, including how to find an old iPhone serial number, what is a water-type Pokémon weak to, how to program an Insignia remote, Craigslist house renting, and what is a trend in Twitter. It’s all over the map, even if the topics are all vaguely related to technology. Most of this information is already available online, so the content isn’t especially valuable, unique, or interesting; it mostly rehashes what’s already been said elsewhere.

In these cases, Google is likely to rank the authoritative sites that originally answered these questions. Without adding anything unique or original, sites like this are at risk of massive ranking losses.

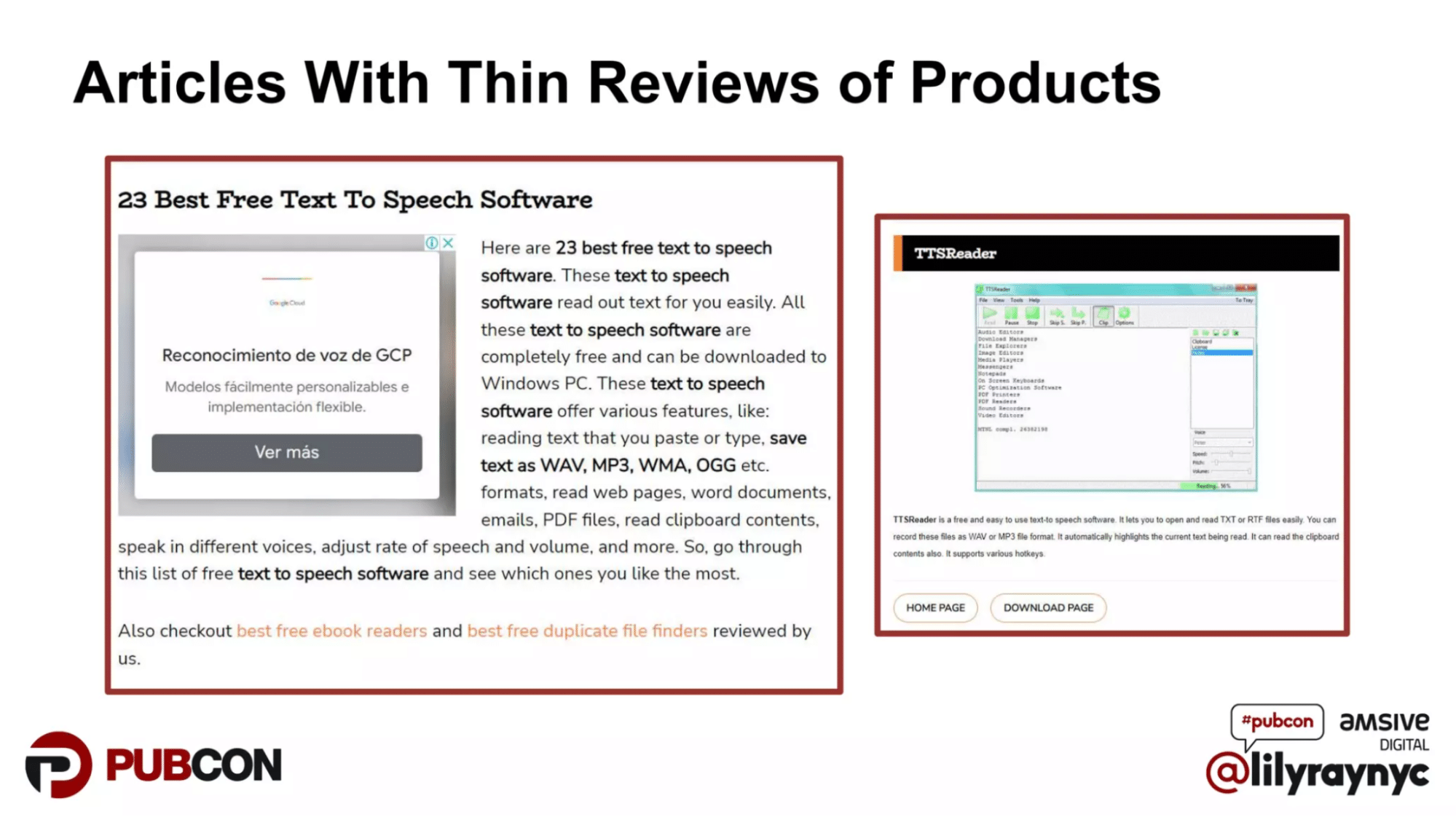

Articles with thin product reviews are prime targets for this system. An article like ‘23 Best Free Text to Speech Software’ that pairs thin product reviews with SEO bolding, on-page ads, and affiliate links isn’t really adding any unique value. While it may look like decently helpful content, in reality it just embeds a few YouTube videos and adds a couple of sentences for each product.

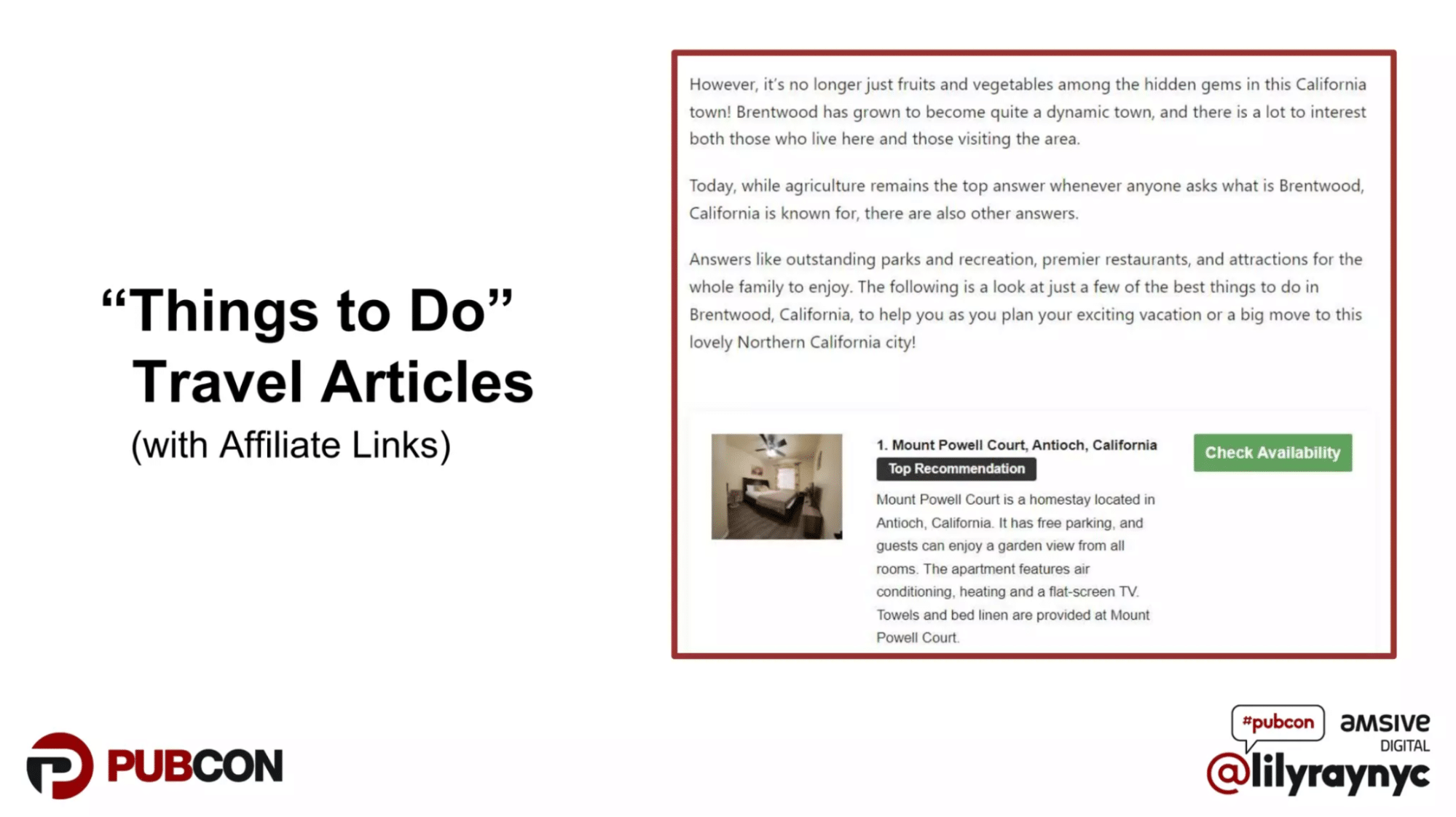

‘Things to Do’-style travel articles are also prime targets for the helpful content system to devalue. People have realized they can write a lot of travel content by hiring writers or by using AI to talk about different travel locations and include affiliate links to booking websites without saying anything particularly original. This is especially prevalent in the age of ChatGPT, where it’s possible for anyone to just churn out the same content about the best places to go in any city or town in the US.

When there’s no real evidence that the author has actually been to the location, taken photos, or had a unique experience there, these articles just aren’t very helpful. Google and users alike are starting to tell the difference between travel articles written from first-hand experience and articles written for the sake of posting another affiliate link.

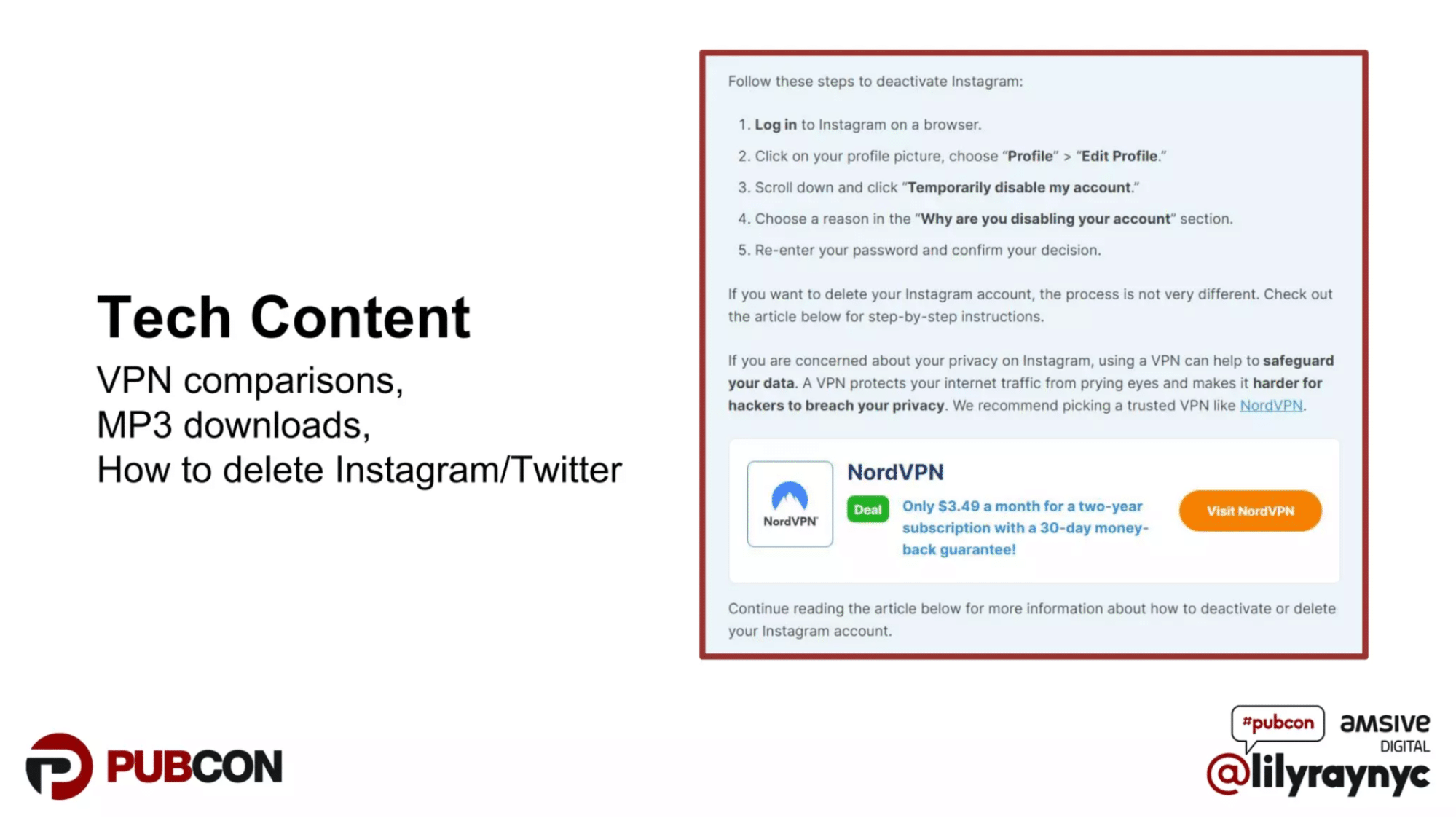

Content written with the clear intent of ranking in order to sell a product can also be hit by this system. Tech content that says something like, ‘This is how you delete your Instagram; now download our VPN if you’re concerned about privacy,’ isn’t really on brand. It doesn’t offer a unique selling proposition; it’s just SEO content that falls right into the gray area of what could be considered spam.

A deeper dive into a network of sites affected by the helpful content system

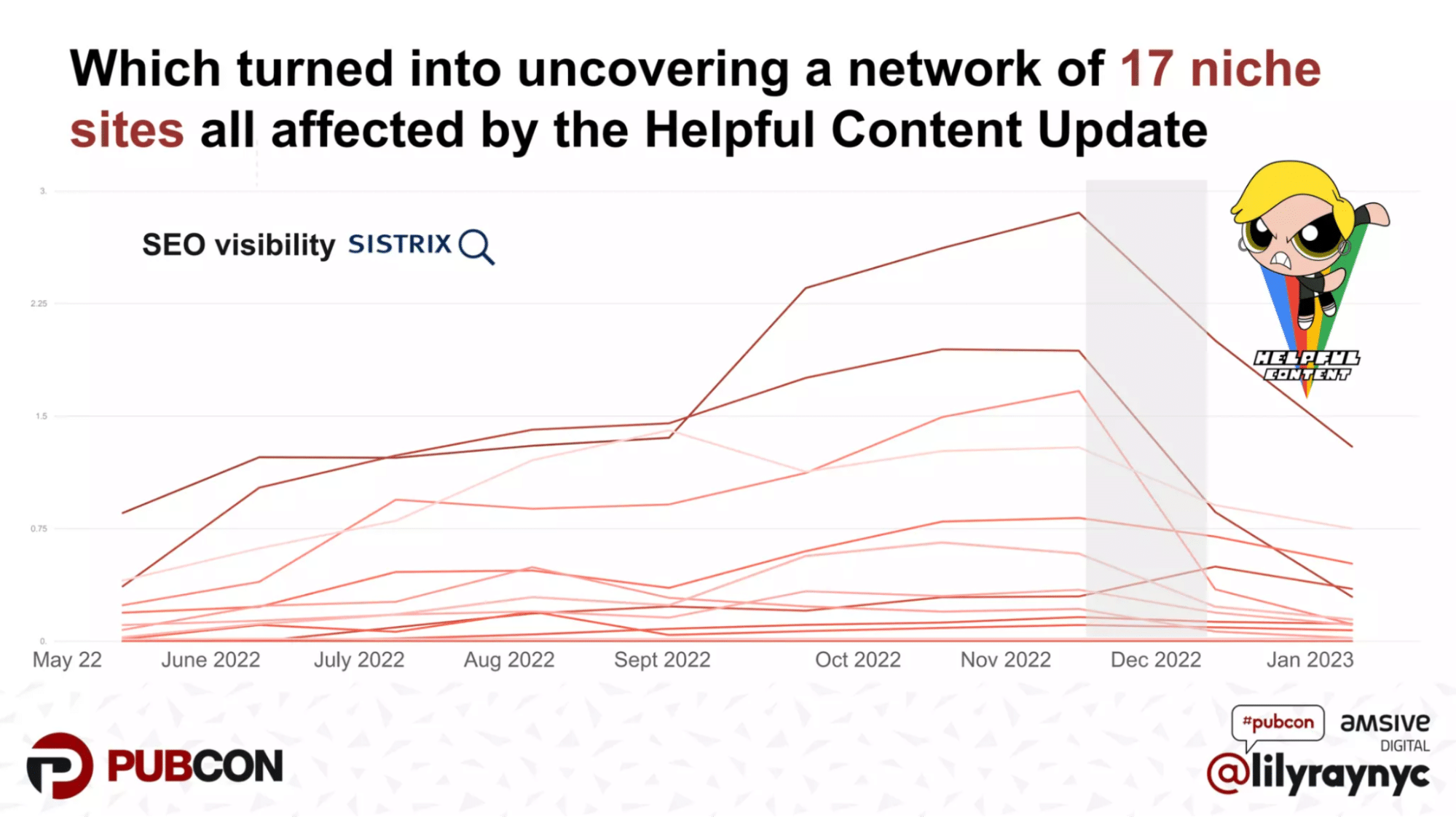

In December 2022, I found a site that was affected as the system rolled out across Google. After digging into it, I found that it was just one of 17 niche sites in a network, all of which were affected by the helpful content system:

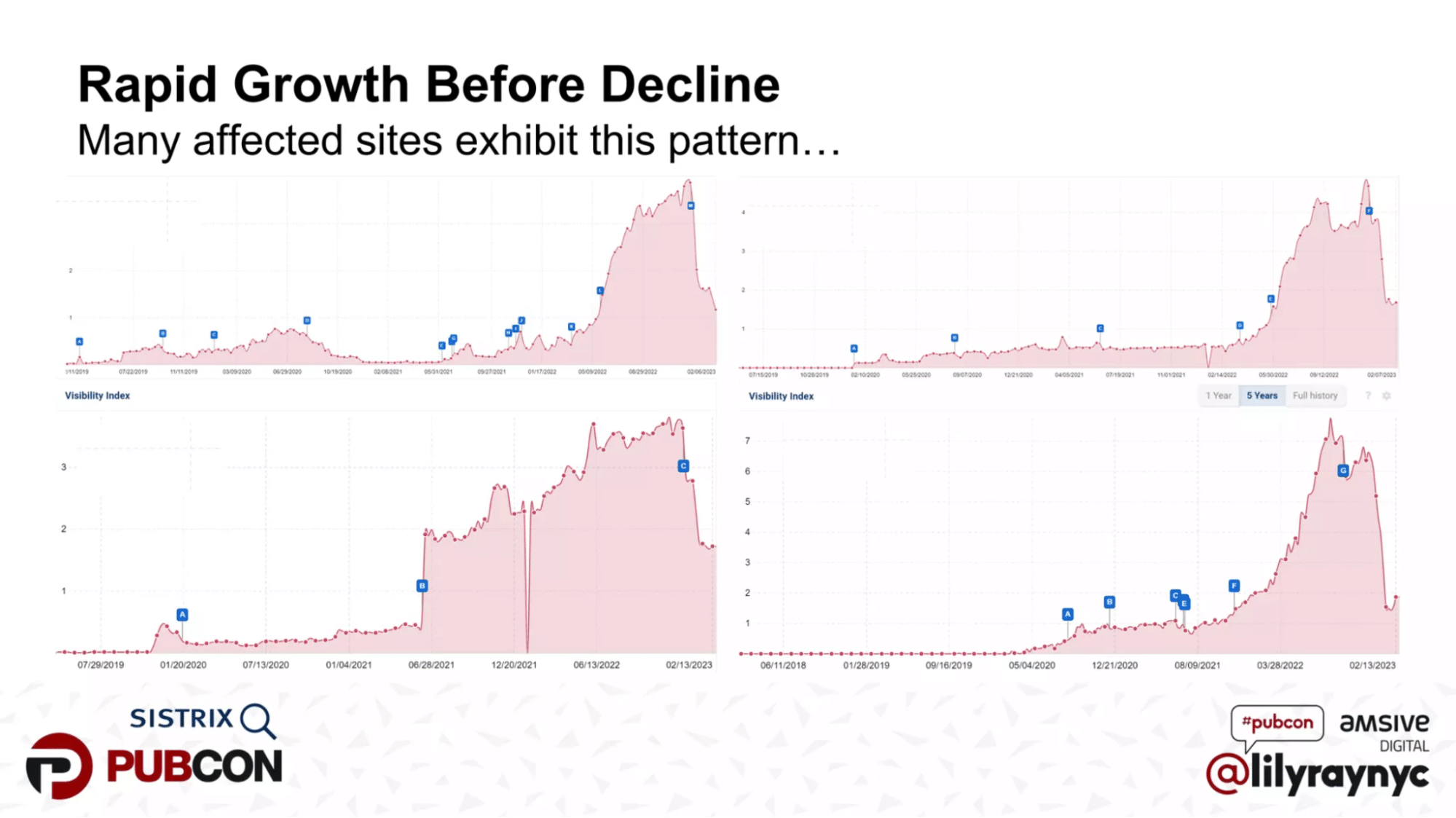

The gray section marks when the December update rolled out. While all the sites in the network had generally seen good growth leading up to it, they all suffered notable drops afterward. All 17 sites followed a similar pattern: each had thousands of articles with mediocre content and tried to cover every topic within its niche. However, they all lacked real experience, expertise, authoritativeness, and trust.

Most of the articles started with some version of ‘What is…’, and many ventured into topics outside the site’s wheelhouse. An art or music site would sometimes cover health topics, for example, which isn’t a great practice. Staying in your core lane is much more effective; venturing into vastly different topics tends to produce lackluster content.

There’s also excessive interlinking between sites in the network, heavy reliance on stock photos, and aggressive ads and affiliate links. While these aren’t necessarily the factors that caused the sites to be hit by the helpful content system, they are qualities you’ll see across many of the affected sites.

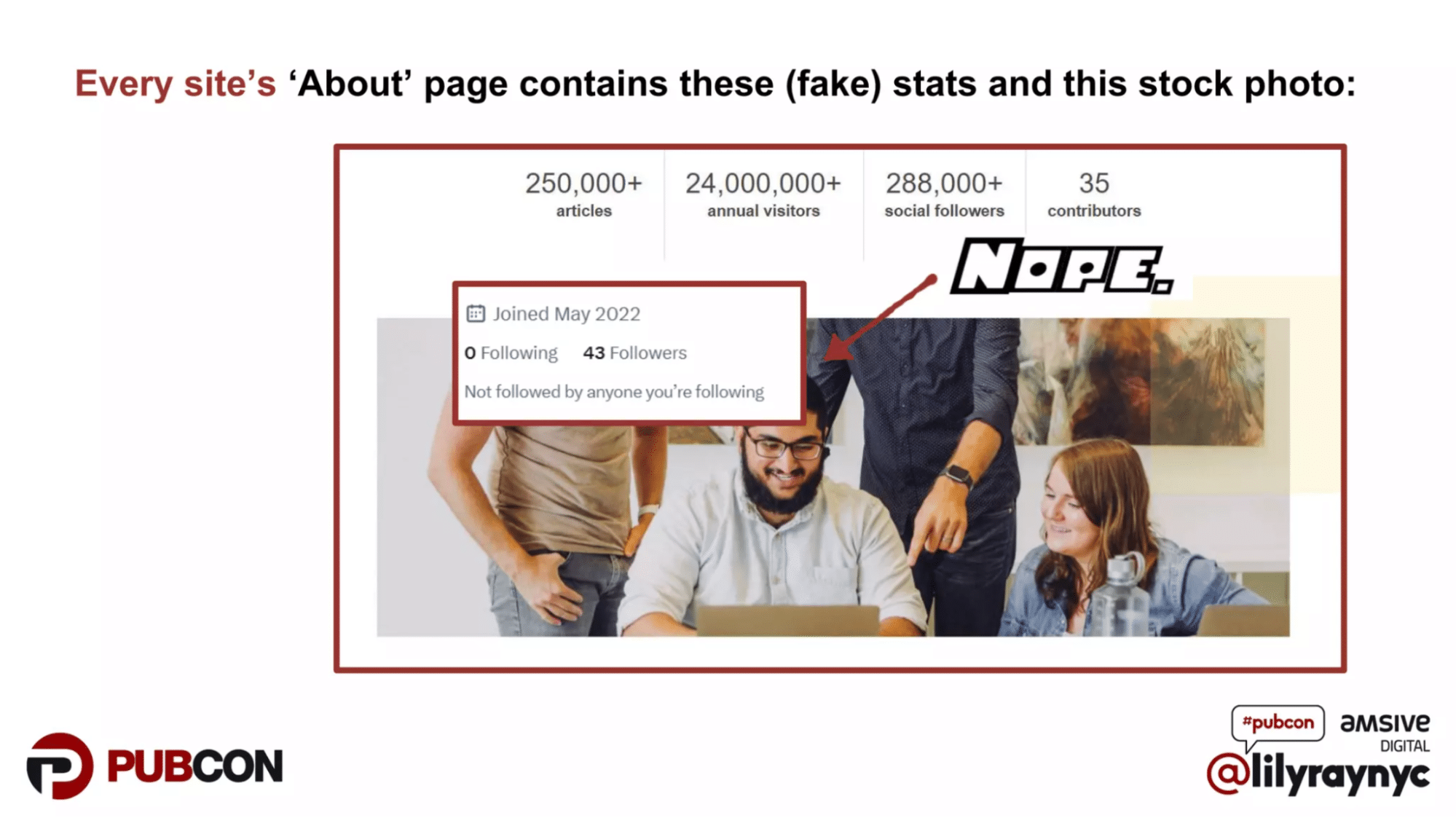

These sites carry many fake E-E-A-T signals, such as “fact checked” labels and possibly fake authors attached to posts. The sites in this network also generally lie about their readership statistics and show no real evidence that people actually read what they publish. Each about page is virtually the same, with minor differences, claiming 250,000+ articles, 24+ million visitors, and 288,000+ social followers. A quick check of their social media shows that these stats are simply false, which Google can easily verify as well.

While these sites have identified that ‘What is…?’ questions have high search volume, answering all of those questions with information scraped from other sources isn’t the best approach. In cases like these, the unhelpful content classifier is applied, the site is considered unhelpful, and it can experience a ranking demotion across the board.

What doesn’t work as far as helpful content is concerned?

- Sites that pretend to be experts on too many different topics appear to have run into problems since the helpful content system rolled out.

- The information might be generic without unique or expert insights.

- The content might lack detail or specificity.

- The content velocity and the topic selection seem to reflect an SEO-first approach.

- There are overwhelming ads and affiliate links. It’s important to note that this is correlation, not causation: the links themselves aren’t necessarily what causes the helpful content system to kick in, but many of the impacted sites appear to rely on heavy ads and affiliate links as their main source of revenue.

December Update to the Google Search Quality Rater Guidelines

There was a big update to the quality rater guidelines in December 2022. It wasn’t just the introduction of the new ‘E’ in E-E-A-T, which I wrote about in Search Engine Land and which we’ve covered on the Amsive blog. A big takeaway is that our understanding of what was previously known as E-A-T now includes ‘Experience’, and that trust sits at the center of the E-E-A-T family. ‘Experience’, ‘Expertise’, and ‘Authoritativeness’ can all contribute to users trusting a website more.

What does expertise vs. experience look like?

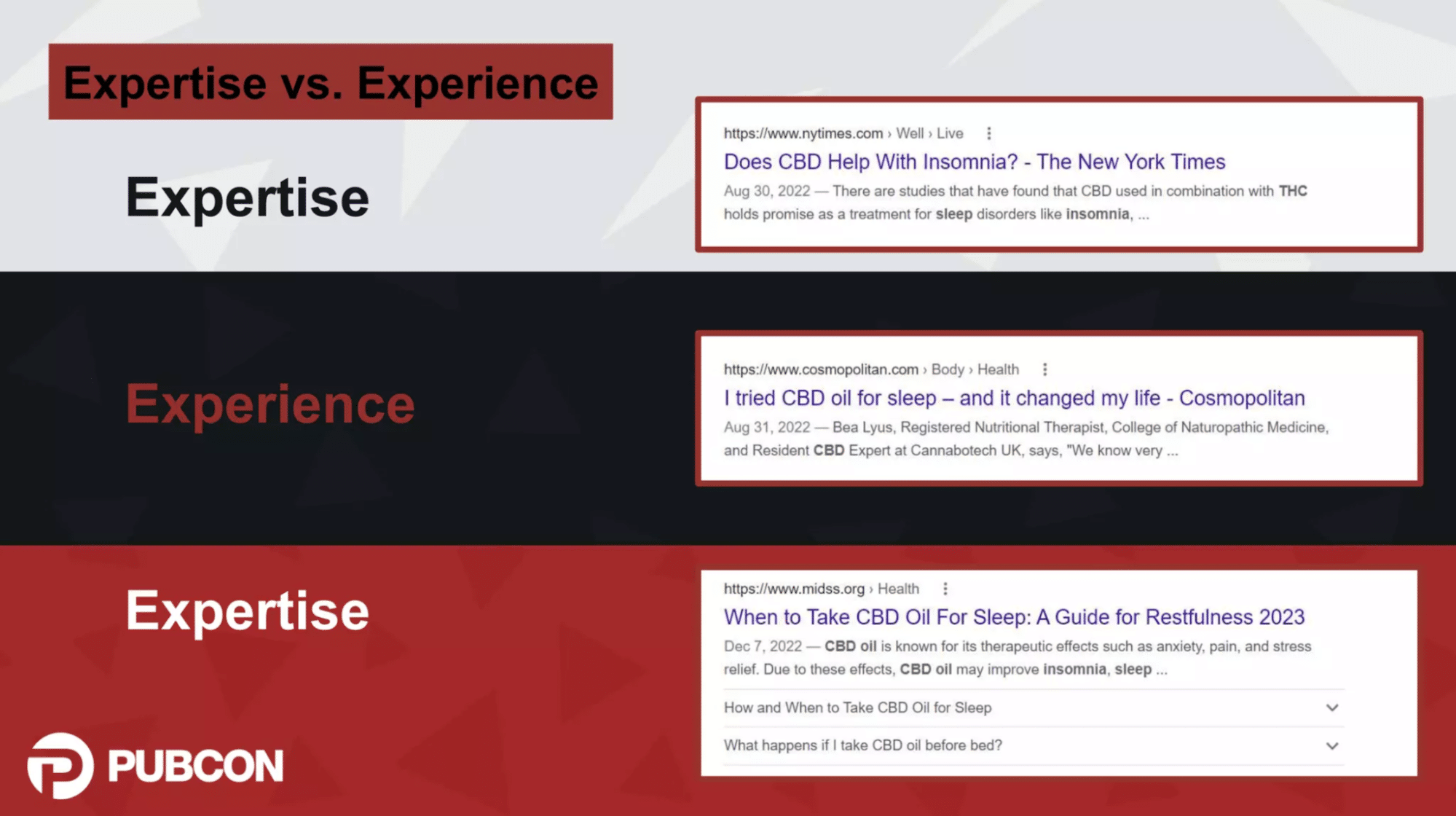

If you search for ‘does CBD help with sleep?’, an expert article might be one from the New York Times reporting that studies have found a link between CBD and insomnia. Another kind of expert article might be titled ‘When to Take CBD Oil for Sleep.’ An article centered on experience might be titled ‘I tried CBD oil for sleep — and it changed my life’. It’s that personal touch that signals experience rather than expertise.

In Google’s quality rater guidelines, they explain that you can be an expert on cancer by being an oncologist, but you can have experience with cancer by being a cancer survivor. Both experience and expertise can lead users to trust your website, but they apply differently. In some cases, the user is looking for experience; in others, they’re looking for expertise; and sometimes they’re looking for both. Both can factor into Google’s understanding of whether your site is high or low quality.

It’s important to understand that quality raters are busy right now, and we’re still waiting for Google to roll out new updates this year. People have pointed out that one site doesn’t show expertise and ranks just fine, that another shows no experience and ranks just fine, or that another launched a fully AI-generated campaign with no first-hand experience and ranks just fine. But quality raters are now using new criteria and a new rubric to evaluate content. Those evaluations are happening right now, and we haven’t seen their results just yet.

We haven’t seen a new core update roll out this year, and we’re somewhat overdue for one. It wouldn’t be surprising to see experience play a larger role in how Google ranks content this year. We haven’t seen the full effect of the new experience criterion yet, so if you come across anecdotes about Google ranking content that shows no experience, just wait. Google is probably still working out how to separate good-quality content actually written by humans from content that has no real first-hand experience.

How Does AI Fit In?

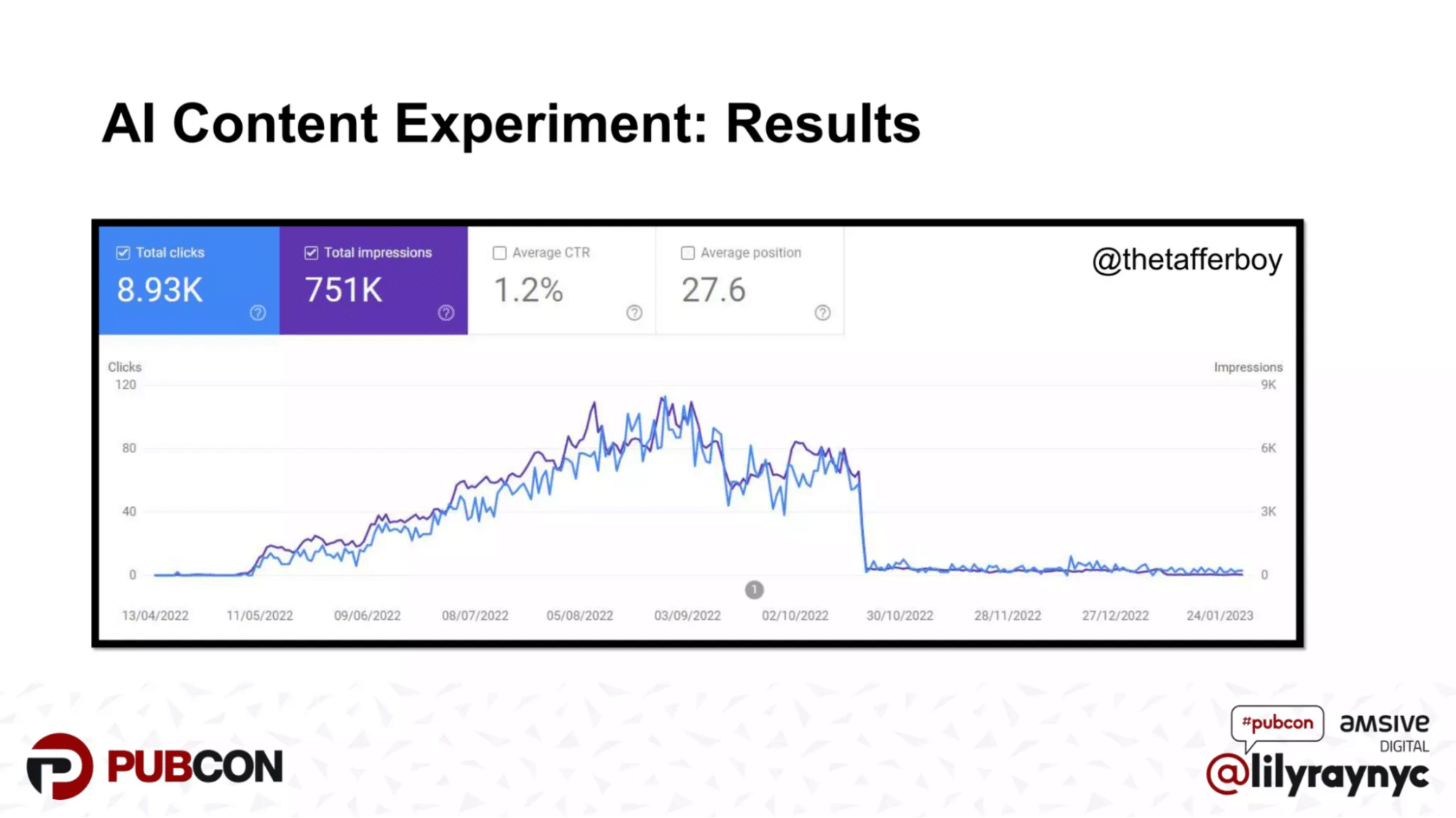

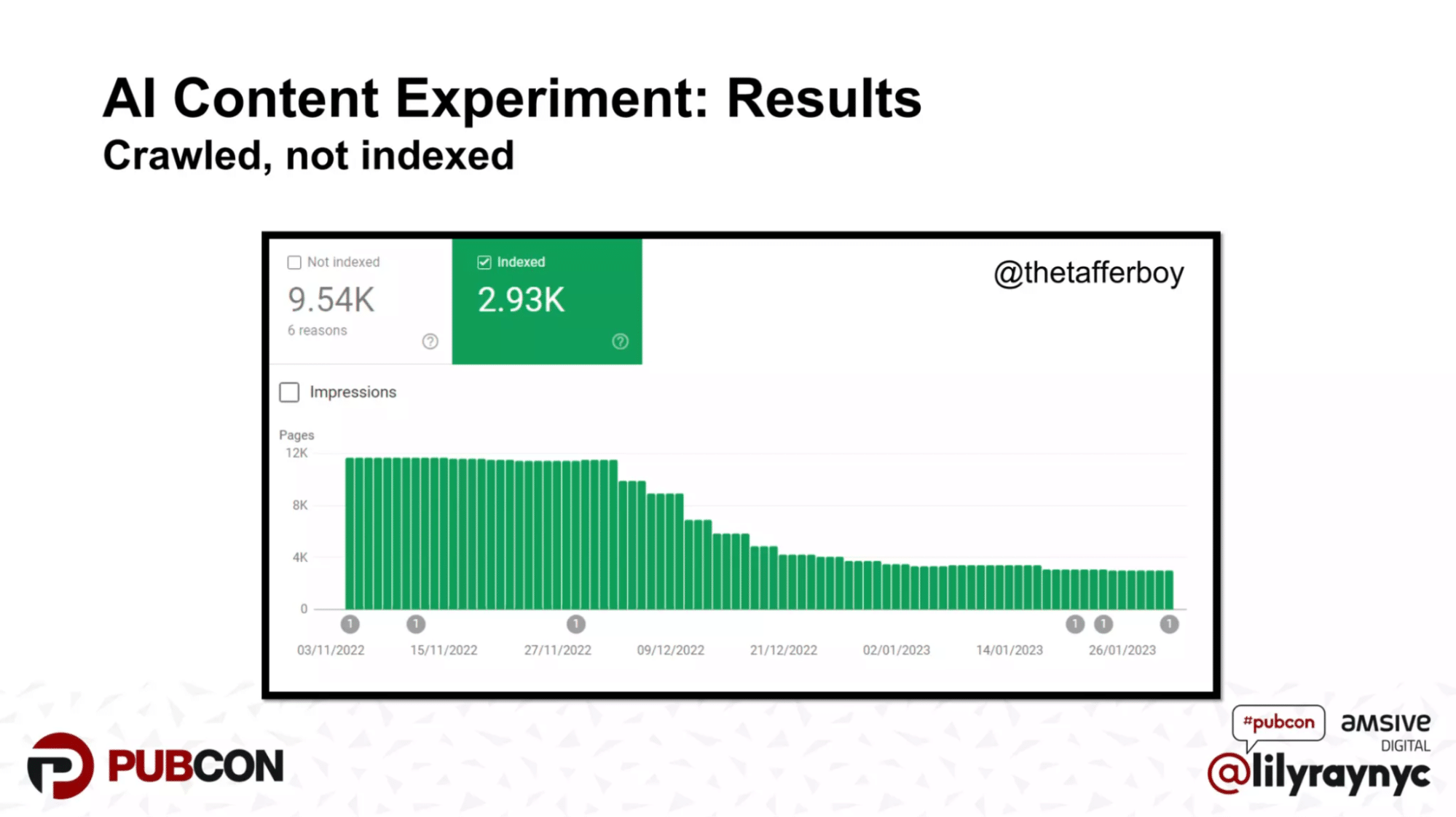

Last year, Mark Williams-Cook, the founder of AlsoAsked, published a brand-new niche site as a test. He scraped relevant People Also Ask questions, used GPT-3 to answer them across more than 10,000 URLs (this was before GPT-3.5 came out, so the technology was a bit older), and added a few links from other sites.

It worked until it didn’t. The first dip came with the initial rollout of the helpful content update in August, followed by a really big drop-off in October, when the site’s traffic basically fell to zero. The number of indexed pages also dropped significantly, with pages moving into the ‘Crawled - currently not indexed’ status in Google Search Console. This means Google was crawling the pages but had decided to no longer index them.

What does this all mean for AI content?

The key takeaway for this whole blog, for the helpful content system, for AI content, and for low-quality content, is that it works until it doesn’t. Just because we can get away with something now doesn’t mean that will remain the case forever. We don’t know what updates and systems Google will roll out this year. We don’t know if Google is going to bring the hammer down on AI content this year. We just don’t know yet.

However, we do finally know what Google has to say about it. At the beginning of February, Google published new documentation explaining how it views AI-generated content. The takeaways are that AI content can be fine if:

- It is original and high-quality

- It demonstrates E-E-A-T

- It satisfies the helpful content system

- It doesn’t propagate misinformation

- It isn’t created with the primary purpose of manipulating search results

Meeting these criteria likely requires human involvement, even in AI-generated content, to help it stand out from other sites that may be using similar tools. There’s a strong chance that Google will be able to catch on to content mass-generated by these tools in their current form, and it’s important to remember that you are accountable for the AI content you publish, so make sure your site isn’t spreading misinformation.

There are three important caveats to consider as well:

- Google recommends listing author names for content when the reader might ask, “Who wrote this?”

- Google recommends disclosing to the reader when AI was used in the content creation process.

- Google recommends against listing AI as the “author” of the content.

This feels like a bit of a checkmate: Google wants you to list the author’s name, wants you to disclose when AI was used, and doesn’t want you to name AI as the author. Essentially, Google is asking real human authors to reveal if and when they used AI.

Google is also quietly getting better at understanding who authors are. A lot is happening behind the scenes to elevate original content written by specific authors. If you google a journalist’s name today, you’ll probably see not only a knowledge panel but also a panel showing recent articles they’ve written across different publications. All of this indicates that Google continues to lean into individual expertise and understanding who authors are. For more on how Google’s patents describe its ability to understand authorship online, take a look at my article in Search Engine Journal that digs into them.

The main takeaways from all of this are that helpful content requires effort and that shortcuts can catch up with you. Instead of focusing on scale, focus on content quality and E-E-A-T. Stay in your lane, invest in your authors’ online reputations, and build out their personal knowledge graphs, because you can’t fake real first-hand experience.

See the slides from the PubCon 2023 presentation, “A Deep Dive into the Latest Google Updates,” below.